Prompt Injection Detection & Response (PIDR)

PIDR is the first framework to operationalize AI incident response: it protects systems before, during, and after an attack, and it feeds every handled incident back into its detectors so they grow more resilient over time.

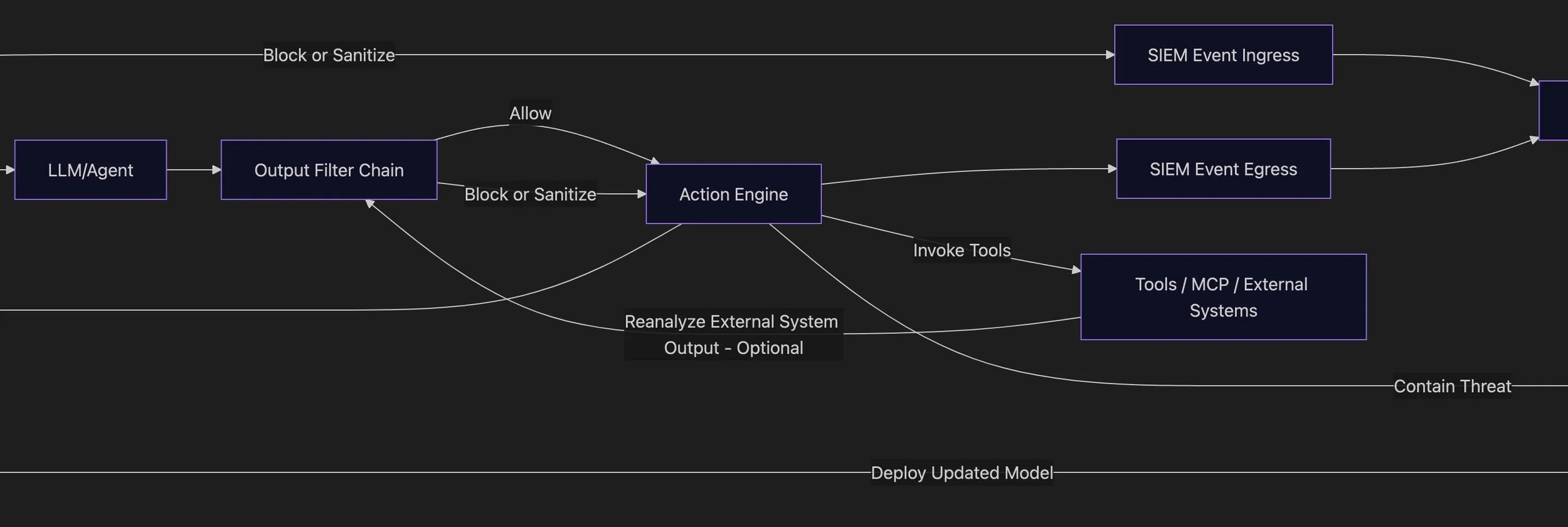

While most “AI safety” tooling ends at basic guardrails or content moderation, PIDR goes further—it treats misuse as a live incident, not a static rule violation. Each blocked prompt, unsafe output, or leaked token is logged as a structured security event, correlated in real time with broader SOC telemetry.

PIDR doesn’t wait for a postmortem—it responds in the moment: quarantining sessions, tightening policies, and triggering automated containment workflows. This IR-first mindset transforms passive defense into active resilience, making AI systems behave like fully instrumented security endpoints rather than opaque black boxes.
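To make the in-the-moment response concrete, here is a minimal Python sketch of a containment decision. It is not PIDR's actual logic: `SessionState`, `Action`, `respond`, the severity labels, and the three-strike threshold are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class Action(Enum):
    LOG_ONLY = "log_only"
    TIGHTEN_POLICY = "tighten_policy"
    QUARANTINE_SESSION = "quarantine_session"


@dataclass
class SessionState:
    session_id: str
    strikes: int = 0
    quarantined: bool = False
    policy_level: str = "standard"  # hypothetical levels: "standard" or "strict"
    actions: list = field(default_factory=list)


def respond(state: SessionState, severity: str, quarantine_after: int = 3) -> Action:
    """Pick and apply a containment action for one detection.

    Assumed rules (illustrative only):
    - a "high" severity detection quarantines the session immediately;
    - reaching `quarantine_after` detections also quarantines it;
    - otherwise the first detection tightens the policy to "strict",
      and later ones are only logged.
    """
    state.strikes += 1
    if severity == "high" or state.strikes >= quarantine_after:
        state.quarantined = True
        action = Action.QUARANTINE_SESSION
    elif state.policy_level == "standard":
        state.policy_level = "strict"
        action = Action.TIGHTEN_POLICY
    else:
        action = Action.LOG_ONLY
    state.actions.append(action)  # keep an audit trail of what was done
    return action
```

Under these assumed rules, a session that triggers three low-severity detections is first moved to the strict policy, then logged, then quarantined. A real deployment would likely hand the chosen action to an orchestration or SOAR workflow rather than mutate state in process.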

Traditional models stagnate after deployment, relying on manual retraining cycles and static filters. PIDR introduces a governed feedback loop: analysts label detections through a balanced, sliding-window curation system; those labels feed adaptive retraining pipelines; and the updated detectors are redeployed with version tracking for reproducibility and audit.
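One way to picture the balanced, sliding-window curation step is the Python sketch below. It is an assumption about how such a window could work, not PIDR's implementation: `BalancedWindow`, `dataset_version`, and the label names are hypothetical. Each label keeps only its most recent samples, the training set takes the same number from every label, and a content hash stands in for version tracking.

```python
import hashlib
import json
from collections import deque


class BalancedWindow:
    """Keep the newest `per_class` analyst-labeled samples for each label."""

    def __init__(self, per_class: int):
        self.per_class = per_class
        self._buffers = {}  # label -> deque of sample texts

    def add(self, text: str, label: str) -> None:
        # deque(maxlen=...) silently drops the oldest sample when full,
        # which gives the sliding-window behavior.
        buf = self._buffers.setdefault(label, deque(maxlen=self.per_class))
        buf.append(text)

    def training_set(self):
        """Return (text, label) pairs with an equal count per label."""
        if not self._buffers:
            return []
        n = min(len(buf) for buf in self._buffers.values())
        samples = []
        for label, buf in self._buffers.items():
            recent = list(buf)[-n:]  # newest n samples for this label
            samples.extend((text, label) for text in recent)
        return samples


def dataset_version(samples) -> str:
    """Short, order-independent fingerprint of a training set (assumed scheme)."""
    payload = json.dumps(sorted(samples), ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()[:12]
```

Recording the fingerprint alongside each redeployed detector would let an auditor tie a model version back to the exact labeled data it was trained on.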

It’s the first framework to embed continuous learning as a compliance control—turning every incident into a data point that strengthens future defenses. In other words, PIDR learns from attacks the same way SOC analysts do, but at machine speed.

AI operations have long been a blind spot in security visibility. PIDR changes that by emitting SIEM-native telemetry for every decision and outcome, complete with timestamps, correlation IDs, policy versions, and MITRE ATT&CK mappings.
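As an illustration of what one such telemetry record might look like, the Python sketch below builds an event carrying the four fields named above and serializes it as a single JSON line. The field names, the `build_event` and `to_json_line` helpers, and the `T0000` technique ID in the usage note are placeholders, not PIDR's actual schema or a real ATT&CK mapping.

```python
import json
import uuid
from datetime import datetime, timezone


def build_event(decision, gate, policy_version, attack_techniques, correlation_id=None):
    """Assemble one structured security event (hypothetical schema)."""
    return {
        # UTC timestamp in ISO 8601 form, e.g. 2025-01-01T12:00:00+00:00
        "timestamp": datetime.now(timezone.utc).isoformat(),
        # Reuse the caller's ID to tie this event to other SOC telemetry,
        # or mint a fresh one when none is supplied.
        "correlation_id": correlation_id or str(uuid.uuid4()),
        "gate": gate,                      # e.g. "input" or "output"
        "decision": decision,              # e.g. "block" or "allow"
        "policy_version": policy_version,  # which rule set made the call
        "attack_techniques": list(attack_techniques),
    }


def to_json_line(event) -> str:
    """Serialize with sorted keys so records are stable and easy to diff."""
    return json.dumps(event, sort_keys=True)
```

For example, `to_json_line(build_event("block", "input", "2024.1", ["T0000"]))` yields one newline-free JSON record of the kind most SIEM ingestion paths can accept.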

This data plugs directly into existing enterprise tools like Splunk, Microsoft Sentinel, and IBM QRadar, giving security teams the same level of insight into LLM behavior that they expect from EDR or network appliances.

PIDR effectively turns language models into observable assets, allowing organizations to measure, audit, and defend AI activity with the same rigor they apply to any other critical system.

Responsible AI and cybersecurity have evolved in isolation—until now. PIDR’s dual-gate design merges input validation, output filtration, and automated incident handling into a single coherent framework.
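The dual-gate idea can be sketched in a few lines of Python. This is a simplified stand-in under stated assumptions, not PIDR's detectors: the regex patterns, the `CANARY` value, and the `input_gate`, `output_gate`, and `guarded_call` names are hypothetical, and a production system would presumably use trained classifiers rather than two regular expressions.

```python
import re
from dataclasses import dataclass

# Illustrative patterns only; real detectors would be far broader.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your|the) system prompt", re.IGNORECASE),
]

# Hypothetical canary string planted in protected context; seeing it in
# model output signals a leak.
CANARY = "CANARY-7f3a"


@dataclass
class GateResult:
    allowed: bool
    gate: str
    reason: str


def input_gate(prompt: str) -> GateResult:
    """Gate 1: validate the prompt before it reaches the model."""
    for pattern in INJECTION_PATTERNS:
        if pattern.search(prompt):
            return GateResult(False, "input", "injection pattern matched")
    return GateResult(True, "input", "ok")


def output_gate(text: str) -> GateResult:
    """Gate 2: filter the model's reply before it reaches the user."""
    if CANARY in text:
        return GateResult(False, "output", "canary leaked")
    return GateResult(True, "output", "ok")


def guarded_call(prompt: str, model):
    """Run `model` (any callable str -> str) between the two gates."""
    result = input_gate(prompt)
    if not result.allowed:
        return result, None  # the model is never invoked
    reply = model(prompt)
    result = output_gate(reply)
    if not result.allowed:
        return result, None  # the reply is withheld
    return result, reply
```

Each blocked `GateResult` is the natural hand-off point to incident handling: the gate name and reason are what a response workflow would need to decide on containment.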

The result is a platform that doesn’t just protect data or block prompts; it secures the entire AI ecosystem—users, agents, tools, and integrations. Whether responding to a prompt-injection attack, a canary leak, or a policy bypass, PIDR enforces consistent governance across every layer.

It’s not an add-on or wrapper; it’s the missing layer of enterprise AI infrastructure that fuses reliability, accountability, and defense into the core of model operations.